The internet has grown from being just a communication medium to becoming a marketplace, an entertainment source, a news centre, and much more. At any given moment, there are thousands of gigabytes of information travelling across the planet. But all of this comes to a standstill when the internet shuts down. An internet shutdown is a government-enforced blanket restriction on the use of the internet in a region for a particular period of time. The reasons vary from a law and order situation to a dignitary visiting the place. We need to analyse whether such shutdowns can be justified, even on the direst of grounds.

These shutdowns can be initiated with little effort on the part of the authorities, because Internet Service Providers (ISPs) do not hesitate to follow government ‘directives’. The justifications the authorities provide range from the possibly reasonable to the absurd. For example, in February, the Gujarat government blocked mobile internet services across the state because the Gujarat State Subsidiary Selection Board was conducting exams to recruit revenue accountants. The stated reasons were the “sensitive nature of the exam” and that it was “necessary to do so to prevent misuse of mobile phones.” This step is clearly disproportionate to the problem it addresses. Exam officials have other ways to prevent malpractice, and cutting mobile internet across an entire state is not only inefficient but also highly disruptive to the general populace. Judging proportionality becomes more complicated when the government justifies a shutdown on grounds of law and order or national security.

Before we answer these questions, we need to first probe into the very foundation on which a democracy functions: discourse. Democratic discourse depends on access to information. When information is lacking, public discourse loses its functionality, as the participants will not understand the problems well enough to propose targeted solutions. The internet has now become one of the most important mediums for information dissemination, with the ability to provide ground-level data about people in places where the conventional media cannot enter or does not want to. It acts as a medium through which sections of society that are normally outside the purview of the mainstream can raise their voice for the general public to hear. By virtue of this, it becomes an important tool in furtherance of a right central to the democratic process, the right of free speech and expression. Keeping this extremely important function of the internet in mind, we can now analyse the problem of internet shutdowns.

The following questions must be answered before a shutdown can even be considered: first, whether the problem is so serious that such an extreme step becomes necessary; second, whether the government has considered other alternatives, even if the problem is serious enough; third, whether keeping a major communication channel functioning will benefit or harm the general population; and fourth, whether there are enough safeguards to ensure that the government does not abuse the power it has been given.

Even when there is a valid justification, a shutdown can be problematic. When unruly sections are using a few limited channels to spread hate and rumours, the government can shut down those specific channels and contain the situation instead of cutting off internet access entirely, which affects the businesses and lives of millions of innocent parties. A targeted approach also reduces the collateral damage from taking down harmless websites or, more tangibly, the systems that banks run on. If a blanket restriction is required despite all of this, the question arises as to who should be able to impose it. Section 144 of the Criminal Procedure Code has been employed for this purpose; however, its validity has been called into question multiple times.

There are enormous free speech implications of not letting people make use of an important communication channel. Earlier, the conventional media were the sole sources of information for the general public, making them the gatekeepers of information. These organisations, though free to a great extent, can be pressured by the government, through fear of some sort of sanction, into not attacking it directly or not reporting certain atrocities. With the advent of internet-enabled communication channels, each and every individual could contribute to the broader pool of information. By shutting down the internet, the government cuts off information about the situation at its source. This raises concerns about accountability, as little ground-level data is available about atrocities or any excessive use of force by law enforcement authorities.

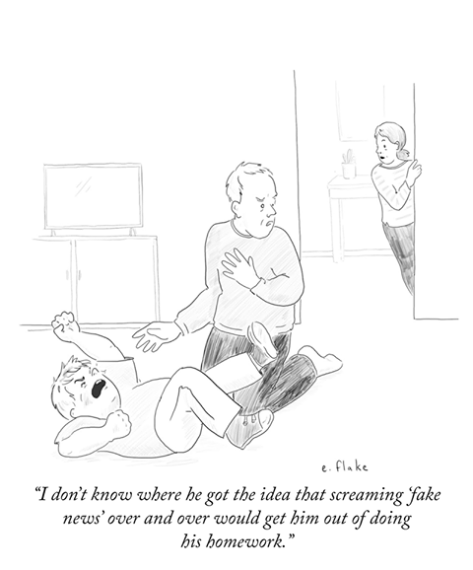

Furthermore, when there is a law and order situation, as when the Gujarat government shut down the internet during the Patidar movement, it becomes very important that people are not misled by fake news and rumours, and the internet could prove to be a very useful tool to fight fire with fire. The government can use the same channels to reach out to the public and reduce confusion. For example, during the Cauvery Riots the Bangalore City Police effectively used Twitter and Facebook to dispel rumours and instil a sense of security among the people. Not letting average citizens engage with other individuals and with the state during tough times further alienates them. The safety of loved ones is the topmost priority of the general populace in these situations. The internet serves as a medium to communicate with them, and a shutdown cuts off that access. This only leads to further chaos and unrest, and thus shutting down access is counterproductive.

One of the most significant and tangible forms of damage that blocking the internet causes is to business establishments. According to the Brookings Institution, internet shutdowns cost India $968 million from July 2015 to June 2016. Banks are largely dependent on the internet to conduct their daily transactions, and face massive problems during a shutdown. The infrastructure required for debit and credit cards, ATMs and internet banking runs on the internet. In addition, shutdowns affect brick-and-mortar stores, as a significant number of them have started to move towards digital payment modes post demonetisation. Needless to say, the most immediate impact is faced by e-commerce websites, which by the very nature of their activities are reliant on the internet.

There is also a more insidious side to this. When an easy measure like cutting access to a communication medium is used to address a broader societal complication, it is only a surface-level step that cuts off engagement on that issue. Shutting down the internet is a highly publicised step, which makes it seem as though the shutdown is part of a bigger set of measures being used to tackle the situation. This creates an illusion of action while the actual problem persists, and the state continues to use only coercive power to deal with it. Targeted measures, which would provide much better long-term solutions, are not taken into consideration due to a lack of political will or simply lethargy on the part of the establishment. In addition, this points to a wider lack of understanding, both of the actual issues at hand and of how the internet works: how interconnected the populace is with the internet, and thus the effect a shutdown has on the entire society.

As more and more people come online and the government pushes towards a digitised economy, it becomes all the more necessary not to shut the internet down. The local, state and national governments need to take responsibility for public disorder and engage with the issue at hand. The state needs to start balancing the interests of national security against the protection of individual rights.

The Internet Freedom Foundation has launched a campaign to address this very issue. Support it by going to keepusonline.in and signing the petition asking the government to make regulations that reduce arbitrariness in imposing these shutdowns.